Java REST API Benchmark: Tomcat vs Jetty vs Grizzly vs Undertow, Round 2

This is a follow-up to the initial REST/JAX-RS benchmark comparing Tomcat, Jetty, Grizzly and Undertow.

In the previous round, where the default server configuration was used, the race was led by Grizzly, followed by Jetty, Undertow and finally Tomcat.

In this round, I have set the maximum worker thread pool size to 250 for all 4 containers.

To make this happen, I had to make some code changes for Jetty as well as Grizzly, as this was not possible in the original benchmark.

This allowed me to start the container with the thread pool size as a command line parameter.
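To illustrate the idea, here is a minimal sketch of reading a worker pool size from the command line, using only the JDK. It is not the code from the benchmark repository: the class name `WorkerPoolArgs`, the helpers `parseMaxThreads` and `newWorkerPool`, and the fallback to 250 are all assumptions made for this example, and each container has its own API for plugging in such a value.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Illustrative only: names and defaults are assumptions, not the benchmark's code.
class WorkerPoolArgs {
    // Pool size used in this round of the benchmark.
    static final int DEFAULT_MAX_THREADS = 250;

    // Hypothetical helper: reads the pool size from the first argument and
    // falls back to the default when it is missing, non-numeric or not positive.
    static int parseMaxThreads(String[] args) {
        if (args.length == 0) {
            return DEFAULT_MAX_THREADS;
        }
        try {
            int n = Integer.parseInt(args[0].trim());
            return n > 0 ? n : DEFAULT_MAX_THREADS;
        } catch (NumberFormatException e) {
            return DEFAULT_MAX_THREADS;
        }
    }

    // Builds a worker pool that never grows beyond maxThreads threads.
    static ThreadPoolExecutor newWorkerPool(int maxThreads) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                maxThreads, maxThreads,
                60L, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>());
        // Let idle threads be reclaimed instead of keeping all of them alive.
        pool.allowCoreThreadTimeOut(true);
        return pool;
    }

    public static void main(String[] args) {
        int maxThreads = parseMaxThreads(args);
        ThreadPoolExecutor pool = newWorkerPool(maxThreads);
        System.out.println("Worker pool max size: " + pool.getMaximumPoolSize());
        pool.shutdown();
    }
}
```

With this sketch, something like `java WorkerPoolArgs 250` would cap the pool at 250 threads. In a real container the parsed value would be handed to that container's own thread pool configuration rather than to a plain `ThreadPoolExecutor`.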

For more details about running the tests yourself, please have a look at the GitHub link in the Resources section.

Note that this time the tests were run only with 128 concurrent users because, in the previous round, the number of concurrent users did not make a big difference.

System information

./sysinfo.sh

CPU:

model name : Intel(R) Core(TM) i7-3537U CPU @ 2.00GHz

model name : Intel(R) Core(TM) i7-3537U CPU @ 2.00GHz

model name : Intel(R) Core(TM) i7-3537U CPU @ 2.00GHz

model name : Intel(R) Core(TM) i7-3537U CPU @ 2.00GHz

RAM:

total used free shared buffers cached

Mem: 7.7G 2.5G 5.1G 267M 114M 1.1G

-/+ buffers/cache: 1.4G 6.3G

Swap: 7.9G 280K 7.9G

Java version:

java version "1.8.0_66"

Java(TM) SE Runtime Environment (build 1.8.0_66-b17)

Java HotSpot(TM) 64-Bit Server VM (build 25.66-b17, mixed mode)

OS:

Linux arcad-idea 3.16.0-57-generic #77~14.04.1-Ubuntu SMP Thu Dec 17 23:20:00 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux

Note that there is more free RAM here than in the previous round, as I shut down all running applications.

I also restarted the machine before every single test run.

Results

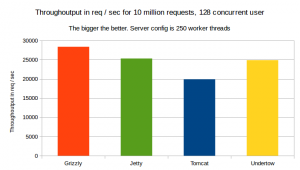

As shown in the graph above, as far as throughput is concerned, Grizzly is again far ahead, followed by Jetty.

Undertow came third, very close to Jetty, and Tomcat came last.

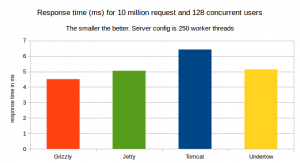

The response time graph above shows Grizzly ahead again, followed by Jetty and Undertow, with Tomcat last.

Conclusion

I expected Undertow to be the fastest of all, but this did not happen.

The results of round 2 are very similar to what we saw in round 1: Grizzly is the fastest container when it comes to serving JAX-RS requests.

Resources

Source code and detailed benchmark results are available at https://github.com/arcadius/java-rest-api-web-container-benchmark